Meta’s AI chatbot, BlenderBot3, seems to have gone the way of many other publicly released chatbots, spouting anti-Semitic conspiracy theories and false claims about the 2020 election.

Chatbots are artificial intelligence programs that learn by constantly holding conversations with real users.

This isn’t the first time something like this has happened. In 2016, Microsoft’s Tay chatbot had to be taken offline just 48 hours after launch, having picked up racist and misogynist rhetoric while interacting with Twitter users.

BlenderBot3 was released to US users on Friday, August 5, 2022. Meta gave users the ability to send the chatbot feedback when it gave incorrect or inaccurate answers. According to a Bloomberg report, the chatbot also has a feature that lets it trawl the internet for conversation topics, which went about as well as could be expected.


Social media quickly became rife with examples of BlenderBot3’s responses, ranging from hilarious to downright offensive. Most amusingly, the chatbot described Meta’s CEO Mark Zuckerberg as “creepy and manipulative,” according to an Insider report. Oddly enough, the AI appears to have absorbed enough online chatter to repeat theories that Zuckerberg is an alien or a “lizard person,” a topic that resurfaces whenever he makes an announcement.

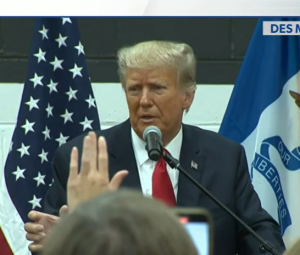

While speaking to a Wall Street Journal reporter, BlenderBot3 repeated a claim many GOP supporters made in the wake of the 2020 US presidential election: that Donald Trump was still president and would continue to be.

Meta has acknowledged these issues, saying the chatbot is still in development and remains an experiment. According to Meta’s latest blog post, compared with its predecessors BlenderBot3 has “improved by 31% on conversational tasks” while being “factually incorrect 47% less often.” The company also said that 0.16% of the chatbot’s responses were insulting or offensive.


In the same breath, Meta acknowledged that BlenderBot3 is “certainly not at a human level” and is “occasionally incorrect, inconsistent and off-topic.”